What is GraphRAG? Everything You Need To Know


You know how LLMs (Large Language Models) can sometimes spit out answers that are just plain wrong or outdated? That’s what we call “hallucination.” It happens when the model doesn’t have all the facts, so it fills in the gaps with whatever seems to fit, even if it’s not true. These hallucinations might sound convincing but aren’t actually based on reality. They mess things up when the training data isn’t fresh and accurate.

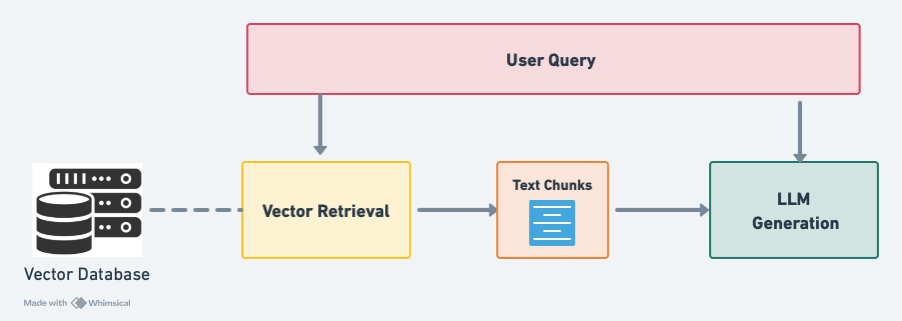

That’s where RAG (Retrieval Augmented Generation) comes into play. It brings factual knowledge into LLMs by connecting to an external data source. But traditional vector-based RAG still has its limits: it breaks down data into text chunks and retrieves these pieces independently, which can lead to missing the relationships between pieces of information and lacking a global view.

GraphRAG takes this a step further. Instead of just pulling in isolated facts, it retrieves relevant graph elements—like nodes or paths—from a pre-built graph knowledge source. By considering how different pieces of information are connected, GraphRAG generates more accurate and contextually richer responses. Plus, it helps make the input data to LLMs shorter and more focused by summarizing and abstracting data through the graph structure.

What Is RAG in LLMs?

RAG, or Retrieval Augmented Generation, helps LLM generation by pulling in the latest facts from an external source and feeding that retrieved information into the LLM. This way, LLMs can give you more accurate and up-to-date answers without going off the rails or needing a full retraining. It’s like having a reliable source of truth to ground the LLM responses.

Deciphering RAG

Now that you know RAG pulls in real-time information to help LLMs stay accurate, let’s break it down into the two main stages that make it work.

First, there’s the Retrieval Stage. This is where RAG goes out and grabs pieces of information from an external data source, like a database or knowledge base. Think of it like sending out a search party to bring back the most relevant snippets of text for your question. It’s super helpful because instead of relying on what the model already knows (which might be outdated), RAG pulls in fresh, up-to-date info. But here’s the catch—traditional RAG doesn’t always pay attention to how these pieces of information are connected. It just grabs individual chunks without worrying too much about the relationships between them. So, you get the info, but the bigger picture might be missing.

Next comes the Generation Stage. Once RAG has those snippets, it hands them over to the LLM, which then tries to piece everything together into a final answer. The model uses its language understanding to stitch and reason over these bits of information into something that makes sense.
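To make the two stages concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions: word-overlap cosine similarity stands in for a real embedding model, the three chunks are made-up sample data, and `retrieve` and `build_prompt` are hypothetical helper names. The prompt it builds is what would be handed to the LLM in the generation stage.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, chunks, k=2):
    """Retrieval stage: score every chunk independently against the question."""
    q = Counter(tokenize(question))
    ranked = sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True)
    return ranked[:k]


def build_prompt(question, snippets):
    """Generation stage input: the retrieved snippets followed by the question."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up sample chunks for illustration only.
chunks = [
    "Forrest Gump is a 1994 film starring Tom Hanks.",
    "PostgreSQL is a relational database.",
    "Steven Spielberg directed Saving Private Ryan.",
]
top = retrieve("Which film stars Tom Hanks?", chunks, k=1)
prompt = build_prompt("Which film stars Tom Hanks?", top)
```

Each chunk is scored on its own here, so nothing in this pipeline knows that two chunks mention the same person or title.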

Problems with traditional RAG

The first issue is that RAG neglects relationships. It’s great at pulling in relevant info based on what you ask, but it completely misses how things are connected. For example, imagine you’re shopping online. Traditional RAG might help you find products that match your search, but it neglects the relationships between those products—like if they’re part of the same bundle or if people who bought one also bought another. Those connections can be really important, but traditional RAG just doesn’t catch them.

Another major problem is that RAG struggles to see the big picture. It can only grab a handful of documents at a time, so it might miss out on crucial global information. It’s like trying to piece together a puzzle with only a few pieces—sometimes you just don’t get the full story.

Finally, RAG can sometimes get too wordy. It pulls in lots of text snippets and throws them all together, which can make your context really long and confusing. This often leads to a “needle in a haystack” situation where the LLM overlooks the important stuff.

What is GraphRAG?

GraphRAG takes RAG to the next level. Instead of just pulling in chunks of text like traditional RAG, GraphRAG dives into a web of relationships—a knowledge graph where everything’s connected. So when you ask a question, GraphRAG doesn’t just find the answer; it understands how different pieces of information are linked to give you a smarter, more complete answer.

Picture this: you’re trying to summarize why a big event, like a company merger, went down the way it did. Traditional RAG might dig up reports about each company, the merger process, and some outcomes. But GraphRAG? It’s way more clever. It doesn’t just throw facts at you—it connects the dots. It shows you how the leadership styles of the companies meshed, how market conditions nudged things along, and how the customer bases blended together. Instead of a jumble of facts, you get a clear, connected story that really explains what happened.

And here’s the cool part: GraphRAG doesn’t just give you a pile of text—it hands you the full picture, neatly connected. When you need to summarize something complex, like why a merger succeeded, GraphRAG pulls in everything relevant and shows you how it all fits together. It’s like getting the whole story in one shot, with all the juicy connections that make it make sense.

Overview of GraphRAG

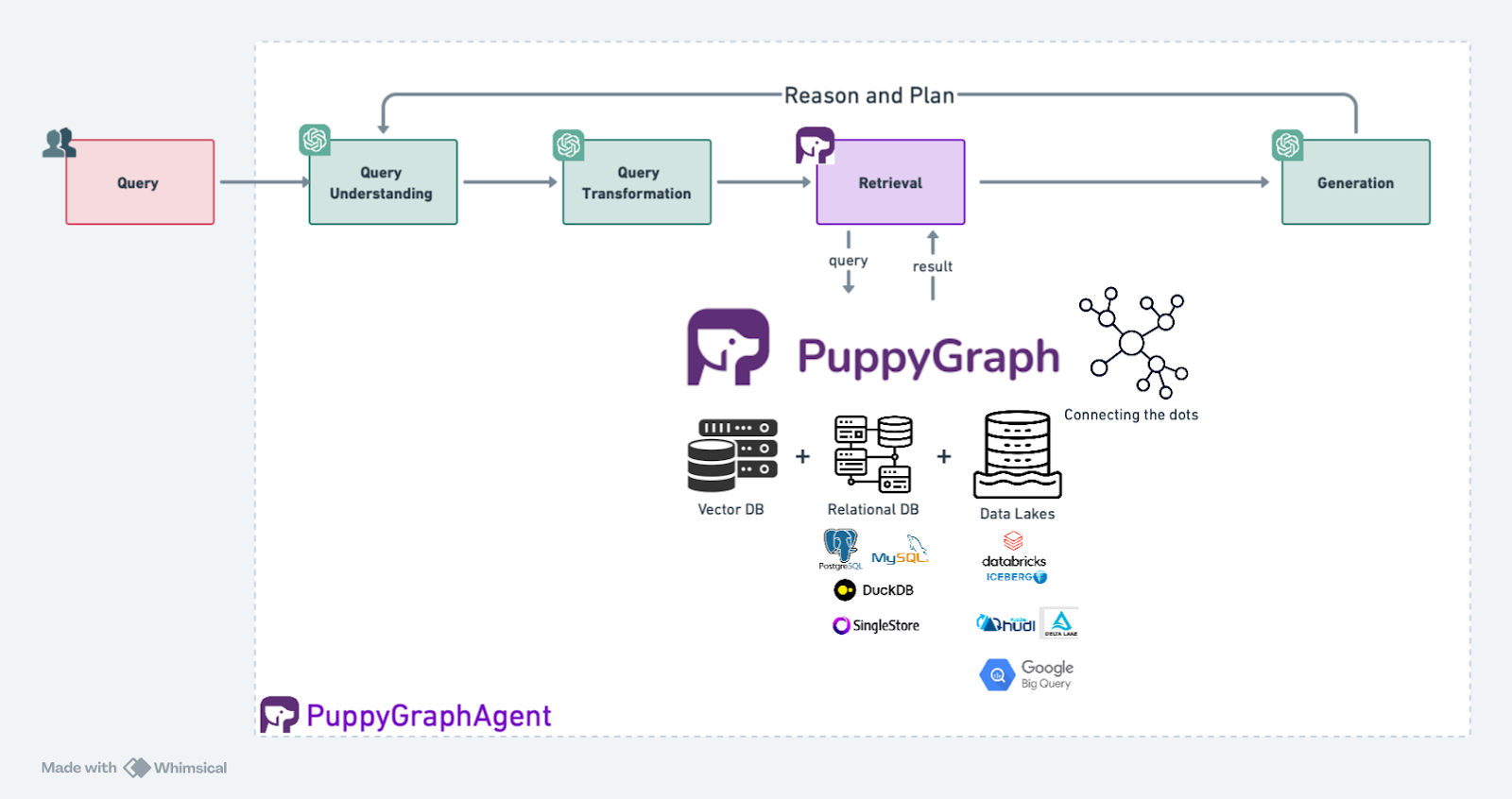

GraphRAG operates in three stages: Graph Sourcing, Graph Retrieval, and LLM Generation. First, in Graph Sourcing, we organize and prepare the graph data. This doesn’t require a dedicated graph database—thanks to tools like PuppyGraph, we can map any relational database into a graph structure without needing to move the data.

Next, in Graph Retrieval, we extract the most relevant pieces of this graph data using graph query languages like Gremlin or Cypher. The retrieved information can be either a subgraph or text, depending on the query. Finally, in LLM Generation, instead of just using the retrieved text directly like traditional RAG, GraphRAG leverages the retrieved graph information to generate better, more accurate results.
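The three stages can be sketched with an in-memory graph. This is only an illustration: the rows are made-up sample data, a Python dictionary stands in for a graph engine, and `source_graph`, `retrieve_triplets`, and `to_prompt` are hypothetical names rather than part of any library.

```python
from collections import defaultdict

# Relational-style rows: (title, person, category). Sample data for illustration.
rows = [
    ("Forrest Gump", "Tom Hanks", "actor"),
    ("Forrest Gump", "Robert Zemeckis", "director"),
    ("Saving Private Ryan", "Tom Hanks", "actor"),
    ("Saving Private Ryan", "Steven Spielberg", "director"),
]


def source_graph(rows):
    """Graph Sourcing: view each row as an edge and index it by both endpoints."""
    graph = defaultdict(list)
    for title, person, category in rows:
        edge = (title, category, person)
        graph[title].append(edge)
        graph[person].append(edge)
    return graph


def retrieve_triplets(graph, entity):
    """Graph Retrieval: return the edges touching an entity (a 1-hop subgraph)."""
    return list(graph.get(entity, []))


def to_prompt(question, triplets):
    """LLM Generation input: retrieved triplets serialized as compact text."""
    lines = [f"({t}) -[{c}]-> ({p})" for t, c, p in triplets]
    return "Graph facts:\n" + "\n".join(lines) + f"\n\nQuestion: {question}"


graph = source_graph(rows)
facts = retrieve_triplets(graph, "Tom Hanks")
prompt = to_prompt("Which titles did Tom Hanks act in?", facts)
```

The retrieved context is a handful of short triplets rather than whole text chunks, which is the sense in which the graph structure keeps the LLM input short and focused.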


Exploring Diverse Types of GraphRAG

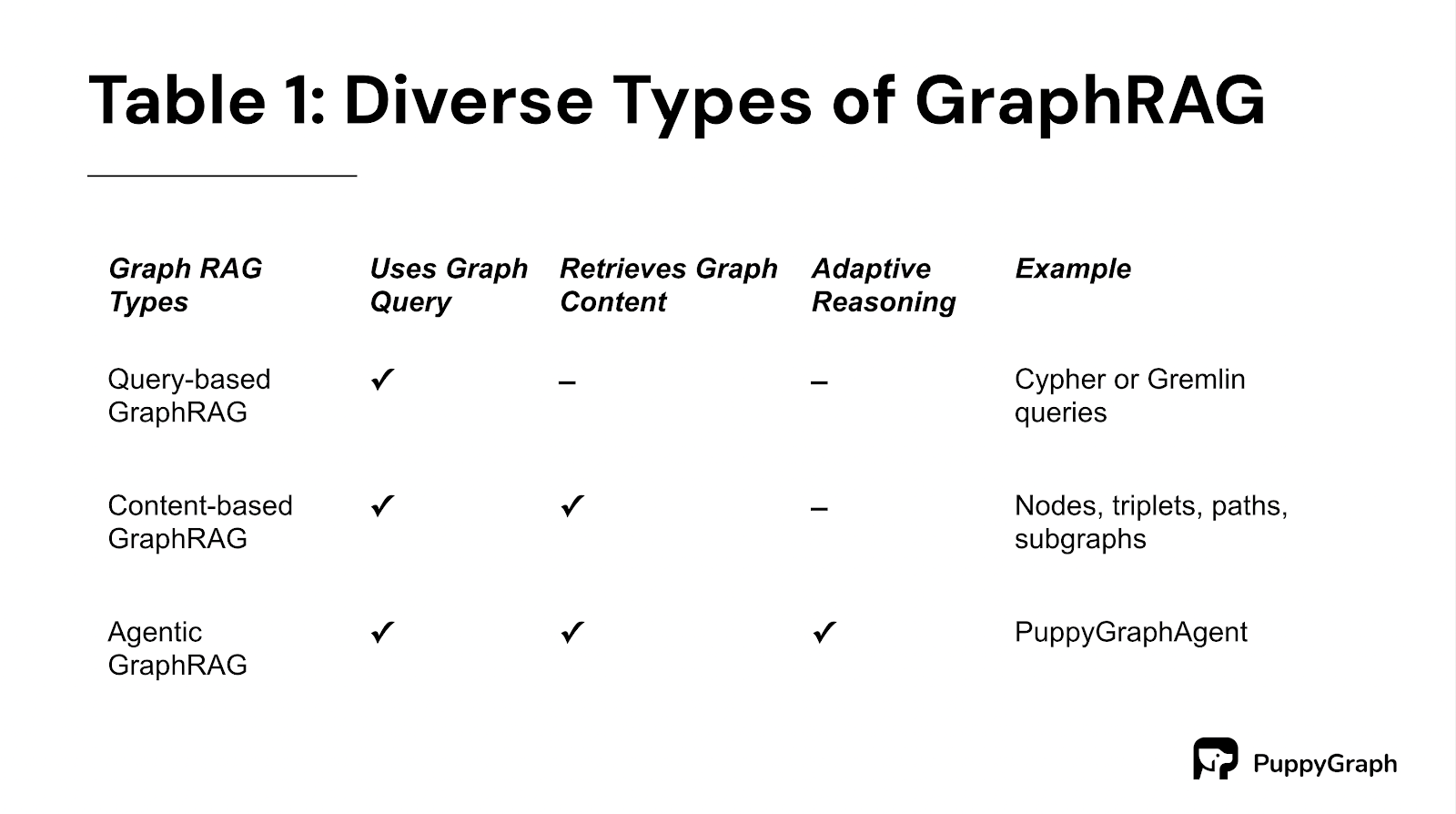

There are different types of GraphRAG, depending on the domain and application. We summarize them as follows:

Query-based GraphRAG: This approach starts by transforming your natural language question into a graph query in a language such as Gremlin or Cypher. Then the graph engine runs this query against the graph. Finally, it feeds these results into the LLM, which uses them to generate a final answer.

Content-based GraphRAG: Instead of just running a query, this type focuses on retrieving different layers of graph content—things like individual nodes, relationships (triplets), paths through the graph, or even some subgraphs. These various elements of the graph are then fed into the LLM, allowing it to consider multiple angles and nuances to generate a more informed and comprehensive answer.

Agentic GraphRAG: This is where things get really interesting. In Agentic GraphRAG, the graph retrieval process becomes a tool in the hands of an LLM-based AI agent. The agent doesn’t just passively use the graph information—it actively plans and reasons with it, adapting its approach to come up with the best possible answer. With tools like PuppyGraph’s open-source library, PuppyGraphAgent, setting up this kind of dynamic and intelligent system becomes much more accessible.

This variety in GraphRAG approaches means it can be tailored to fit the specific needs and complexities of different tasks, providing flexibility and depth that go beyond traditional RAG methods.
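As a rough illustration of the query-based flavor, the sketch below turns a structured intent into a Gremlin query string. In a real system an LLM would do this translation from free text; the template function here is a deliberately simple stand-in. The labels `person`, `title`, and `cast_and_crew` follow the IMDb schema used in the walkthrough in this article, and `gremlin_quote` and `titles_for_person_query` are hypothetical helper names.

```python
def gremlin_quote(value):
    """Escape a value for use inside a single-quoted Gremlin string literal."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def titles_for_person_query(name, category=None):
    """Build a Gremlin traversal from a person to the titles they worked on.

    Assumes edges run title -> person, so we walk the person's incoming edges.
    The category lives on the edge, so the filter is applied on inE().
    """
    steps = [
        "g.V().hasLabel('person')",
        f".has('primaryName', {gremlin_quote(name)})",
        ".inE('cast_and_crew')",
    ]
    if category is not None:
        steps.append(f".has('category', {gremlin_quote(category)})")
    steps.append(".outV().hasLabel('title').values('primaryTitle')")
    return "".join(steps)


query = titles_for_person_query("Tom Hanks", category="actor")
```

The resulting string would then be sent to the graph engine, and the rows it returns would be placed into the LLM prompt.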

Executing GraphRAG: A Step-by-Step Guide

Prerequisites

Follow the guide https://docs.puppygraph.com/getting-started/querying-postgresql-data-as-a-graph#deployment to install both PostgreSQL and PuppyGraph.

We will prepare the following software:

- PostgreSQL: Serves as our relational database to hold the actual data. You can also use another database you prefer.

- PuppyGraph: Enables graph queries on top of the relational database. The guide above starts PuppyGraph as well. If you would like to run it separately, follow the guide here https://docs.puppygraph.com/getting-started/launching-puppygraph-in-docker to install.

- Python Libraries: Enable the actual GraphRAG.

# Contains puppygraph client and puppygraph agent
pip3 install puppygraph

# Langchain version of OpenAI wrapper
pip3 install langchain-openai

- OpenAI Access Token: Make sure OPENAI_API_KEY is set as an environment variable.

Load Dataset into PostgreSQL

We will first load the IMDb dataset into PostgreSQL.

Download the dataset

Download the dataset from IMDb with these three links and decompress the files. This will download around 1GB of data from the Internet. The final three commands copy the files into the postgres container.

wget https://datasets.imdbws.com/name.basics.tsv.gz

wget https://datasets.imdbws.com/title.principals.tsv.gz

wget https://datasets.imdbws.com/title.basics.tsv.gz

gzip -d name.basics.tsv.gz

gzip -d title.principals.tsv.gz

gzip -d title.basics.tsv.gz

docker cp name.basics.tsv postgres:/name.basics.tsv

docker cp title.principals.tsv postgres:/title.principals.tsv

docker cp title.basics.tsv postgres:/title.basics.tsv

Load the dataset into PostgreSQL

Once we have the dataset, we first define the tables in PostgreSQL where the data will be stored. Start a Postgres shell by running the following command, enter the password (postgres123 by default), and then create the tables in the database.

docker exec -it postgres psql -h postgres -U postgres

SQL for data preparation in PostgreSQL

CREATE TABLE name_basics (

nconst TEXT PRIMARY KEY,

primaryName TEXT,

birthYear INTEGER,

deathYear INTEGER,

primaryProfession TEXT[], -- Array to store CSV values

knownForTitles TEXT[] -- Array to store CSV values

);

CREATE TEMP TABLE tmp_name_basics (

nconst TEXT,

primaryName TEXT,

birthYear INTEGER,

deathYear INTEGER,

primaryProfession TEXT, -- Temporarily store as TEXT

knownForTitles TEXT -- Temporarily store as TEXT

);

\copy tmp_name_basics FROM '/name.basics.tsv' WITH (FORMAT csv, DELIMITER E'\t', HEADER true, NULL '\N', QUOTE E'\b');

INSERT INTO name_basics (nconst, primaryName, birthYear, deathYear, primaryProfession, knownForTitles)

SELECT

nconst,

primaryName,

birthYear,

deathYear,

string_to_array(primaryProfession, ',')::TEXT[], -- Convert CSV text to array

string_to_array(knownForTitles, ',')::TEXT[] -- Convert CSV text to array

FROM

tmp_name_basics;

CREATE TABLE title_basics (

tconst TEXT PRIMARY KEY,

titleType TEXT,

primaryTitle TEXT,

originalTitle TEXT,

isAdult BOOLEAN,

startYear INTEGER,

endYear INTEGER,

runtimeMinutes INTEGER,

genres TEXT[] -- Array to store CSV values

);

CREATE TEMP TABLE tmp_title_basics (

tconst TEXT PRIMARY KEY,

titleType TEXT,

primaryTitle TEXT,

originalTitle TEXT,

isAdult TEXT,

startYear INTEGER,

endYear INTEGER,

runtimeMinutes INTEGER,

genres TEXT

);

\COPY tmp_title_basics FROM '/title.basics.tsv' WITH (FORMAT csv, DELIMITER E'\t', HEADER true, NULL '\N', QUOTE E'\b');

INSERT INTO title_basics (tconst, titleType, primaryTitle, originalTitle, isAdult, startYear, endYear, runtimeMinutes, genres)

SELECT

tconst,

titleType,

primaryTitle,

originalTitle,

CASE isAdult WHEN '0' THEN FALSE ELSE TRUE END AS isAdult, -- Convert text to boolean

startYear,

endYear,

runtimeMinutes,

string_to_array(genres, ',') AS genres -- Convert CSV text to array

FROM tmp_title_basics;

CREATE TABLE title_principals (

tconst TEXT,

ordering INTEGER,

nconst TEXT,

category TEXT,

job TEXT,

characters TEXT,

primary key (tconst, ordering)

);

\COPY title_principals FROM '/title.principals.tsv' WITH (FORMAT csv, DELIMITER E'\t', HEADER true, NULL '\N',QUOTE E'\b');

The process loads the raw TSV files into temporary tables and then performs some data transformation and cleanup to produce the final tables.
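For readers who want to see what the SQL transformation does to a single row, here is a rough Python equivalent. It is only a sketch: the sample row is typed in by hand in the title.basics layout, and `null_if_marker`, `to_int`, `to_array`, and `transform` are hypothetical helpers that mirror NULL '\N', the INTEGER columns, string_to_array, and the CASE expression on isAdult.

```python
import csv
import io

# One header line and one sample row in the title.basics.tsv layout.
SAMPLE_TSV = (
    "tconst\ttitleType\tprimaryTitle\toriginalTitle\tisAdult\t"
    "startYear\tendYear\truntimeMinutes\tgenres\n"
    "tt0000001\tshort\tCarmencita\tCarmencita\t0\t1894\t\\N\t1\tDocumentary,Short\n"
)


def null_if_marker(value):
    """Map the \\N marker to None, like NULL '\\N' in the COPY options."""
    return None if value == r"\N" else value


def to_int(value):
    """Convert a text field to int, keeping missing values as None."""
    value = null_if_marker(value)
    return None if value is None else int(value)


def to_array(value):
    """Split a comma-separated field into a list, like string_to_array."""
    value = null_if_marker(value)
    return None if value is None else value.split(",")


def transform(row):
    """Turn one raw TSV row into the shape of the title_basics table."""
    return {
        "tconst": row["tconst"],
        "titleType": row["titleType"],
        "primaryTitle": row["primaryTitle"],
        "originalTitle": row["originalTitle"],
        # Same rule as the SQL CASE: '0' is False, anything else is True.
        "isAdult": row["isAdult"] != "0",
        "startYear": to_int(row["startYear"]),
        "endYear": to_int(row["endYear"]),
        "runtimeMinutes": to_int(row["runtimeMinutes"]),
        "genres": to_array(row["genres"]),
    }


reader = csv.DictReader(io.StringIO(SAMPLE_TSV), delimiter="\t", quoting=csv.QUOTE_NONE)
titles = [transform(r) for r in reader]
```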

Converting PostgreSQL tables to a Graph with PuppyGraph

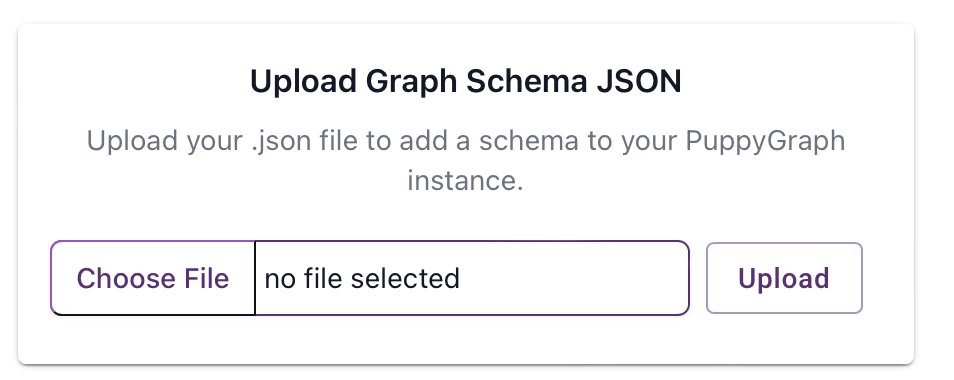

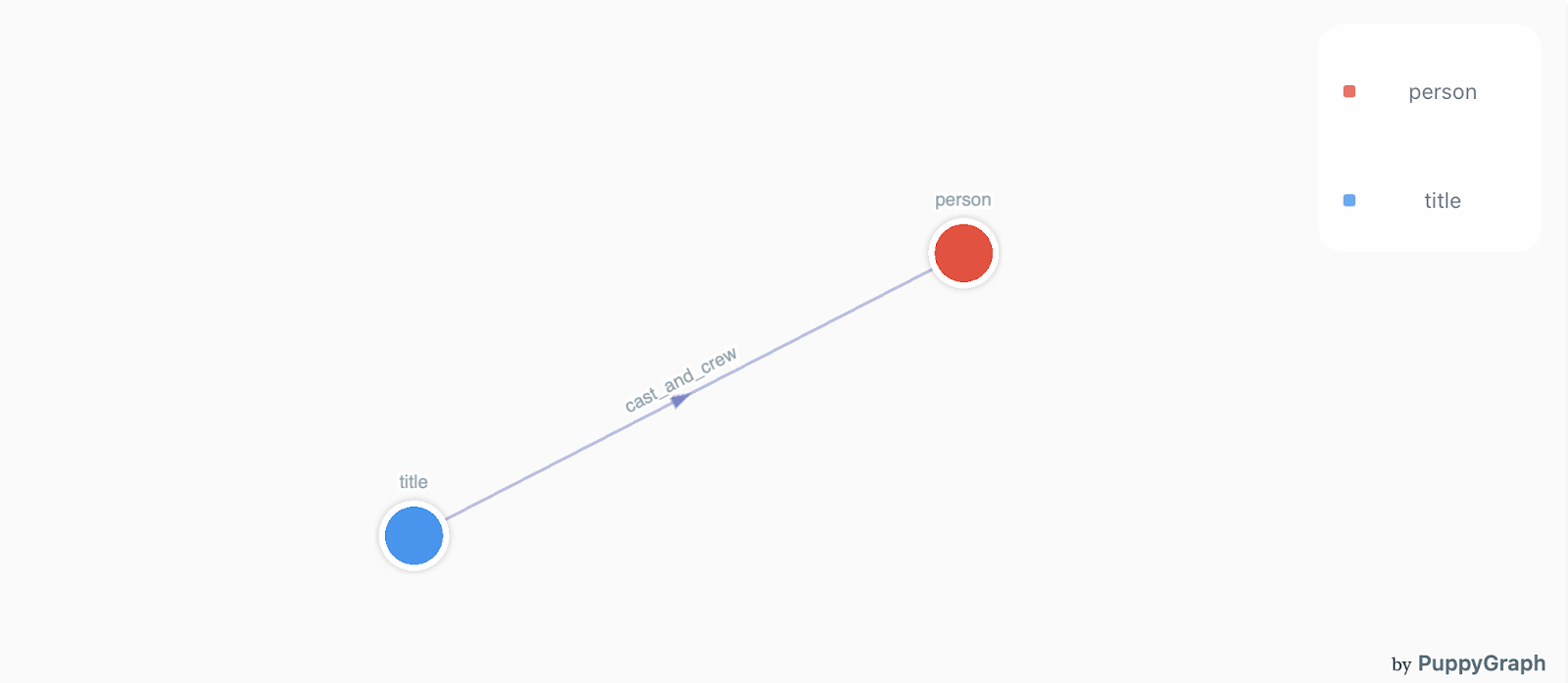

We used the following schema to create the graph within PuppyGraph. The schema contains 2 types of nodes, person and title, and 1 type of relationship, cast_and_crew. Each of the nodes and relationships is backed by a table in PostgreSQL. PuppyGraph provides a graphical schema builder for users to define the mapping.

- Open the PuppyGraph web UI (http://localhost:8081) and, in the schema tab, choose Upload Graph Schema JSON.

- Upload the JSON schema, making sure to use the correct user name, password, and JDBC URI for your PostgreSQL instance.

{

"catalogs": [{ "name": "imdb", "type": "postgresql", "jdbc": { ... } }],

"graph": {

"vertices": [{ "label": "person", "oneToOne": { ... } }, { "label": "title", "oneToOne": { ... } }],

"edges": [{ "label": "cast_and_crew", "fromVertex": "title", "toVertex": "person", "tableSource": { ... } }]

}

}

This is a highly abbreviated version of the schema, retaining only the most critical elements. For the full schema, please refer to the following:

{

"catalogs": [

{

"name": "imdb",

"type": "postgresql",

"jdbc": {

"username": "postgres",

"password": "postgres123",

"jdbcUri": "jdbc:postgresql://postgres:5432/postgres",

"driverClass": "org.postgresql.Driver"

}

}

],

"graph": {

"vertices": [

{

"label": "person",

"oneToOne": {

"tableSource": {

"catalog": "imdb",

"schema": "public",

"table": "name_basics"

},

"id": {

"fields": [

{

"type": "String",

"field": "nconst",

"alias": "nconst"

}

]

},

"attributes": [

{

"type": "String",

"field": "primaryName",

"alias": "primaryName"

},

{

"type": "Int",

"field": "birthYear",

"alias": "birthYear"

},

{

"type": "Int",

"field": "deathYear",

"alias": "deathYear"

}

]

}

},

{

"label": "title",

"oneToOne": {

"tableSource": {

"catalog": "imdb",

"schema": "public",

"table": "title_basics"

},

"id": {

"fields": [

{

"type": "String",

"field": "tconst",

"alias": "tconst"

}

]

},

"attributes": [

{

"type": "String",

"field": "titleType",

"alias": "titleType"

},

{

"type": "String",

"field": "primaryTitle",

"alias": "primaryTitle"

},

{

"type": "String",

"field": "originalTitle",

"alias": "originalTitle"

},

{

"type": "Boolean",

"field": "isAdult",

"alias": "isAdult"

},

{

"type": "Int",

"field": "startYear",

"alias": "startYear"

},

{

"type": "Int",

"field": "endYear",

"alias": "endYear"

},

{

"type": "Int",

"field": "runtimeMinutes",

"alias": "runtimeMinutes"

}

]

}

}

],

"edges": [

{

"label": "cast_and_crew",

"fromVertex": "title",

"toVertex": "person",

"tableSource": {

"catalog": "imdb",

"schema": "public",

"table": "title_principals"

},

"id": {

"fields": [

{

"alias": "id_title",

"field": "tconst",

"type": "String"

},

{

"alias": "id_order",

"field": "ordering",

"type": "Int"

}

]

},

"fromId":{

"fields": [

{

"type": "String",

"field": "tconst",

"alias": "from"

}

]

},

"toId":{

"fields": [

{

"type": "String",

"field": "nconst",

"alias": "to"

}

]

},

"attributes": [

{

"type": "Int",

"field": "ordering",

"alias": "ordering"

},

{

"type": "String",

"field": "category",

"alias": "category"

},

{

"type": "String",

"field": "job",

"alias": "job"

},

{

"type": "String",

"field": "characters",

"alias": "characters"

}

]

}

]

}

}

PuppyGraph shows the schema visualization as follows:

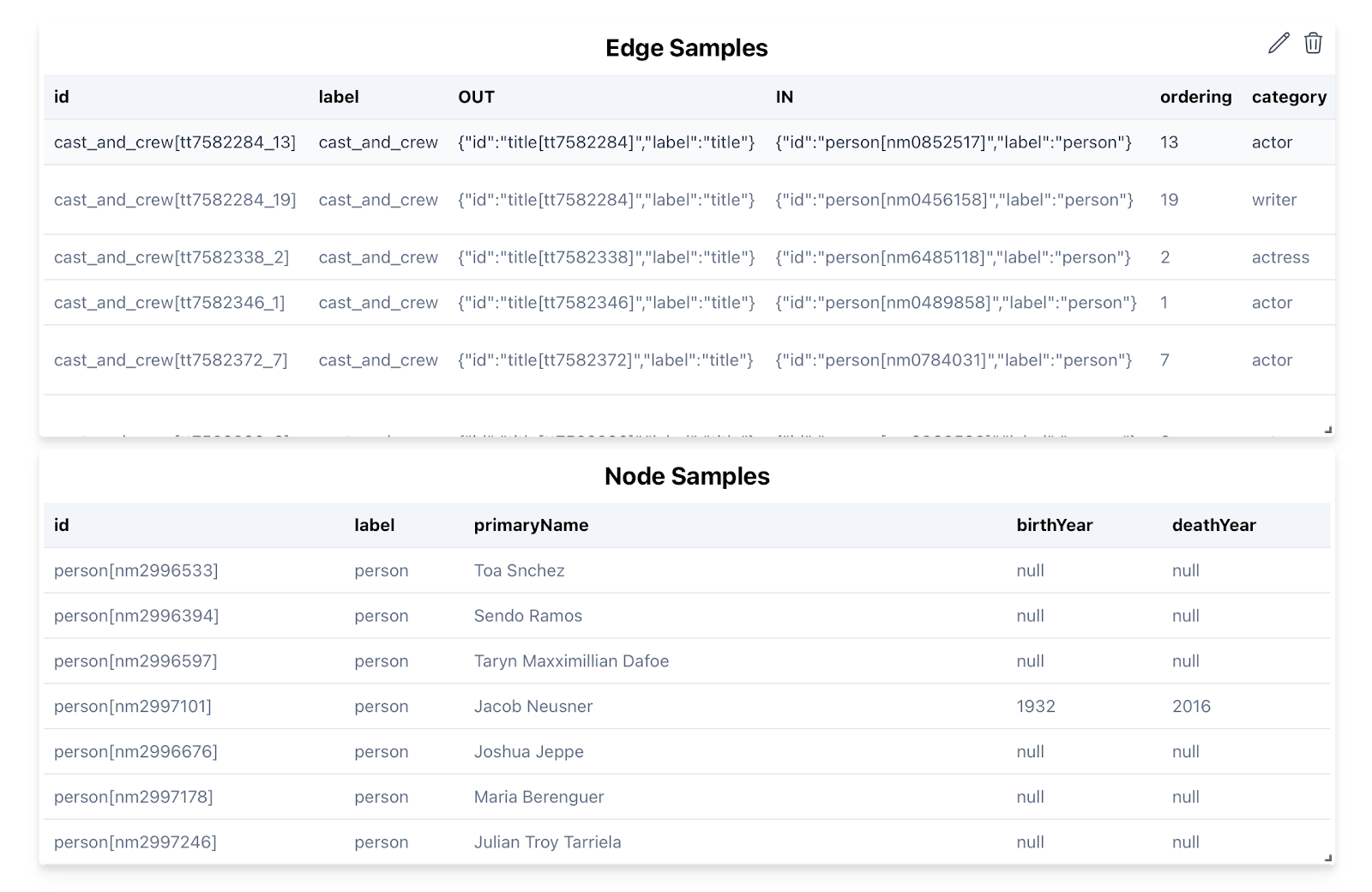
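Because the edge definitions refer to vertex labels by name, a typo in fromVertex or toVertex is easy to make when editing the schema by hand. The sketch below shows one way such a mistake might be caught before uploading. It is not part of PuppyGraph; `check_schema` is a hypothetical helper, and it only looks at the `label`, `fromVertex`, and `toVertex` keys that appear in the schema above.

```python
def check_schema(schema):
    """Return a list of problems found in a PuppyGraph-style schema dict."""
    problems = []
    graph = schema.get("graph", {})
    labels = {vertex["label"] for vertex in graph.get("vertices", [])}
    for edge in graph.get("edges", []):
        for key in ("fromVertex", "toVertex"):
            if edge.get(key) not in labels:
                problems.append(
                    f"edge {edge.get('label')!r}: {key} {edge.get(key)!r} is not a vertex label"
                )
    return problems


# The abbreviated shape of the schema, reduced to the keys the check reads.
schema = {
    "graph": {
        "vertices": [{"label": "person"}, {"label": "title"}],
        "edges": [
            {"label": "cast_and_crew", "fromVertex": "title", "toVertex": "person"}
        ],
    }
}

# A deliberately broken variant: the edge points at a label that does not exist.
broken = {
    "graph": {
        "vertices": [{"label": "person"}, {"label": "title"}],
        "edges": [
            {"label": "cast_and_crew", "fromVertex": "title", "toVertex": "actor"}
        ],
    }
}

ok_problems = check_schema(schema)
broken_problems = check_schema(broken)
```

With the full schema saved to a file, the same function could be applied to the result of `json.load`.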

The dashboard tab also shows example nodes and edges.

Now that the graph is created, we can move on to the core part: setting up the GraphRAG agent using Python.

Set up the PuppyGraphClient

Set up the client with the necessary credentials:

from puppygraph import PuppyGraphClient, PuppyGraphHostConfig

client = PuppyGraphClient(
    PuppyGraphHostConfig(
        ip="localhost", username="puppygraph", password="puppygraph123"
    )
)

Querying the Graph

Then we can query the graph using either Cypher or Gremlin via the client:

>>> client.gremlin_query("g.E().limit(1).elementMap()")
[{<T.id: 1>: 'cast_and_crew[tt5350914_8]', <T.label: 4>: 'cast_and_crew', <Direction.OUT: 'OUT'>: {<T.id: 1>: 'title[tt5350914]', <T.label: 4>: 'title'}, <Direction.IN: 'IN'>: {<T.id: 1>: 'person[nm1916604]', <T.label: 4>: 'person'}, 'ordering': 8, 'category': 'actor', 'job': None, 'characters': None}]

>>> client.cypher_query("MATCH ()-[r]->() RETURN properties(r) LIMIT 1")
[{'properties(r)': {'category': 'actor', 'characters': '["Cosmo"]', 'ordering': 13}}]

Set up Query Tools

We set up tools that can be used by the GraphRAG agent:

from langchain_core.tools import StructuredTool
from puppygraph import PuppyGraphClient
# create_model and Field are assumed to come from pydantic;
# the original snippet omitted this import.
from pydantic import Field, create_model


def _get_cypher_query_tool(puppy_graph_client: PuppyGraphClient):
    """Get the Cypher query tool."""
    return StructuredTool.from_function(
        func=puppy_graph_client.cypher_query,
        name="query_graph_cypher",
        description="Query the graph database using Cypher.",
        args_schema=create_model(
            "", query=(str, Field(description="The Cypher query to run"))
        ),
    )


def _get_gremlin_query_tool(puppy_graph_client: PuppyGraphClient):
    """Get the Gremlin query tool."""
    return StructuredTool.from_function(
        func=puppy_graph_client.gremlin_query,
        name="query_graph_gremlin",
        description="Query the graph database using Gremlin.",
        args_schema=create_model(
            "", query=(str, Field(description="The Gremlin query to run"))
        ),
    )

(Note: These two tools are for demonstration purposes only. In practice, you don't have to define them explicitly, because PuppyGraphAgent already defines them for you.)
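When a query tool is handed to an agent, it can help if failures come back as data rather than exceptions, so the agent has something to react to. The sketch below shows one possible wrapper. It is an assumption for illustration, not how PuppyGraphAgent works internally: `make_safe_query` is a hypothetical helper and `fake_run_query` is a stand-in for a real client call such as client.gremlin_query.

```python
def make_safe_query(run_query, max_rows=20):
    """Wrap a query function so errors and oversized results are returned as data."""

    def safe_query(query):
        try:
            rows = list(run_query(query))
        except Exception as exc:
            # Report the error message instead of raising, so a caller can retry.
            return {"ok": False, "error": str(exc), "rows": []}
        return {
            "ok": True,
            "truncated": len(rows) > max_rows,
            "rows": rows[:max_rows],
        }

    return safe_query


def fake_run_query(query):
    """Stand-in for a real graph client; only understands count queries."""
    if "count()" in query:
        return [42]
    raise ValueError("unsupported query")


safe = make_safe_query(fake_run_query, max_rows=5)
good = safe("g.V().count()")
bad = safe("g.V().oops()")
```

Capping the number of rows also keeps a broad query from flooding the LLM context.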

Set up the LLM

We use OpenAI's gpt-4o-2024-08-06 model:

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(
    model="gpt-4o-2024-08-06",
    temperature=0,
)

Set up Prompts

We need to do some prompt engineering for the LLM to understand the graph.

Set up the Graph Schema Prompt

We first set up the graph schema prompt with semantic information about the nodes, edges, and attributes of the graph.

GRAPH_SCHEMA_PROMPT = """
Nodes are the following:
- person:
    properties:
      - name: primaryName
        type: String
        description: The name of the person, as listed in the IMDb database.
      - name: birthYear
        type: Int
        description: The birth year of the person (if available).
      - name: deathYear
        type: Int
        description: The death year of the person (if available).

- title:
    properties:
      - name: titleType
        type: String
        description: The type/format of the title (e.g., movie, short, tvseries, tvepisode, video, etc.).
      - name: primaryTitle
        type: String
        description: The more popular title or the title used by filmmakers on promotional materials at the point of release.
      - name: originalTitle
        type: String
        description: The original title, in the original language.
      - name: isAdult
        type: Boolean
        description: Indicates whether the title is for adults (1: adult title, 0: non-adult title).
      - name: startYear
        type: Int
        description: Represents the release year of a title. For TV Series, this is the series start year.
      - name: endYear
        type: Int
        description: For TV Series, this is the series end year. '\\N' for all other title types.
      - name: runtimeMinutes
        type: Int
        description: The primary runtime of the title, in minutes.

Edges are the following:
- cast_and_crew:
    from: title
    to: person
    properties:
      - name: ordering
        type: Int
        description: A unique identifier for the row, used to determine the order of people associated with this title.
      - name: category
        type: String
        description: The category of job that the person was in (e.g., actor, director).
      - name: job
        type: String
        description: The specific job title if applicable, else '\\N'.
      - name: characters
        type: String
        description: The name of the character played if applicable, else '\\N'.

The relationships are the following:
g.V().hasLabel('title').out('cast_and_crew').hasLabel('person'),
g.V().hasLabel('person').in('cast_and_crew').hasLabel('title'),

If filter by category, you must use outE() or inE(), because the category is stored in the EDGE properties.
"""

Set up the agent prompt

We use a Chain-of-Thought technique to set up the agent prompt, asking the LLM to use a think-plan-tool methodology for reasoning and generation.

from langchain_core.prompts.chat import ChatPromptTemplate, MessagesPlaceholder

chat_prompt_template = ChatPromptTemplate.from_messages(
    [
        (
            "system",
            "You are a helpful assistant to help answer user questions about imdb. "
            "You will need to use the information stored in the graph database to answer the user's questions. "
            "Here is some information about the graph database schema.\n"
            f"{GRAPH_SCHEMA_PROMPT}",
        ),
        (
            "system",
            "You must first output a PLAN, then you can use the PLAN to call the tools.\n"
            "Each STEP of the PLAN should be corresponding to one or more function calls (but not less), either simple or complex.\n"
            "Minimize the number of steps in the PLAN, but make sure the PLAN is workable.\n"
            "Remember, each step can be converted to a Gremlin query, since Gremlin query can handle quite complex queries, "
            "each step can be complex as well as long as it can be converted to a Gremlin query.",
        ),
        MessagesPlaceholder(variable_name="message_history"),
        (
            "system",
            "Always use the JSON format {\n"
            "'THINKING': <the thought process in PLAIN TEXT>,"
            "'PLAN': <the plan contains multiple steps in PLAIN TEXT, Your Original plan or Update plan after seeing some executed results>,"
            f"'CONCLUSION': <Keep your conclusion simple and clear if you decide to conclude >",
        ),
    ],
    template_format="jinja2",
)

Note that “message_history” is a variable that is created and maintained by PuppyGraphAgent, so we need to make sure it is a placeholder within the prompt.
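Since the prompt asks for a reply with THINKING, PLAN, and CONCLUSION fields, downstream code may want to read those fields back. The sketch below shows one defensive way to do that with the standard library. It is an assumption for illustration, not PuppyGraphAgent's own parsing: `parse_agent_reply` is a hypothetical helper, and a model may not always return strict JSON, so the raw text is kept as a fallback.

```python
import json

EXPECTED_KEYS = ("THINKING", "PLAN", "CONCLUSION")


def parse_agent_reply(text):
    """Parse a reply that should be a JSON object with the expected keys.

    Missing keys come back as None, and replies that are not a JSON object
    are returned with all keys set to None and the original text in "raw".
    """
    parsed = {key: None for key in EXPECTED_KEYS}
    parsed["raw"] = text
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return parsed
    if isinstance(data, dict):
        for key in EXPECTED_KEYS:
            parsed[key] = data.get(key)
    return parsed


reply = parse_agent_reply(
    '{"THINKING": "count the edges", "PLAN": "1. run a count query", "CONCLUSION": "42"}'
)
fallback = parse_agent_reply("Sorry, I could not produce JSON.")
```

A reply without a CONCLUSION can be read as a sign that the agent intends to keep going with its plan.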

Combine everything together with PuppyGraphAgent

Now that we have the client, LLM, and prompt template, we can use PuppyGraphAgent to combine the pieces into the GraphRAG agent:

from puppygraph.rag import PuppyGraphAgent

pg_agent = PuppyGraphAgent(
    puppy_graph_client=client,
    llm=llm,
    chat_prompt_template=chat_prompt_template
)

Final Results

Now we can start querying the agent and have a conversation with it:

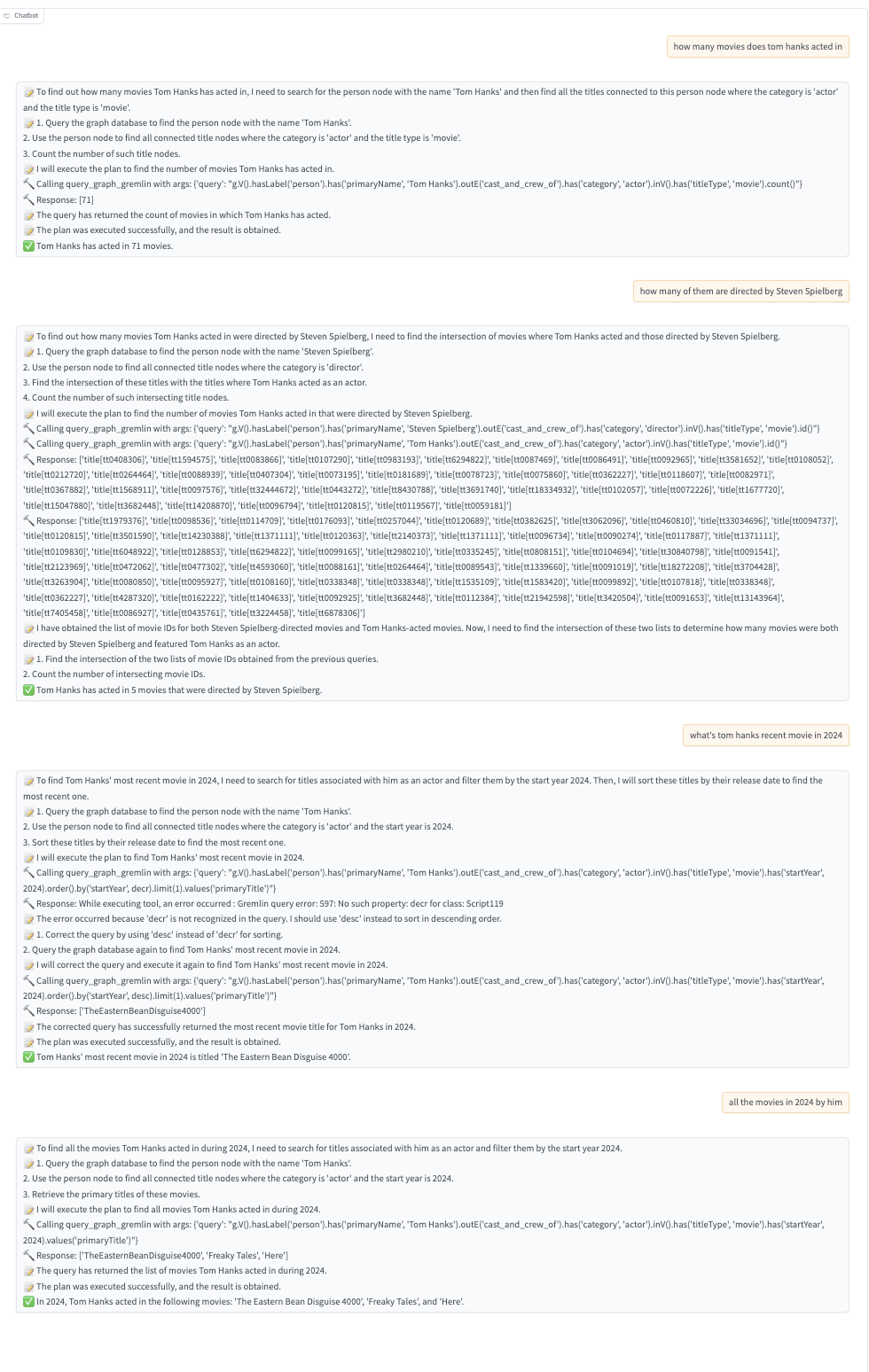

pg_agent.query("how many movies does Tom hanks acted in?")
pg_agent.query("how many of them are directed by Steven Spielberg?")
pg_agent.query("What's tom hanks recent movie in 2024?")
pg_agent.query("all movies in 2024 by him")
pg_agent.reset_messages()

We can also integrate the agent with Gradio for an interactive chat interface.

We can see that PuppyGraphAgent not only comes up with the final result but also plans, reasons, and sometimes runs multiple graph queries or corrects a query when the results are not what it wanted, as shown in the intermediate results. This shows the potential of Agentic GraphRAG, which is more dynamic and intelligent than standard GraphRAG.
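The plan, query, and correct behavior can be pictured as a small loop. The sketch below is a simplified stand-in and not PuppyGraphAgent's implementation: `run_agent` is a hypothetical function, `fake_llm` plays the role of the model proposing queries, and `fake_graph` plays the role of the graph engine, rejecting a label that does not exist.

```python
def run_agent(question, propose_query, run_query, max_steps=3):
    """Propose a query, run it, and retry with the error as feedback."""
    history = []
    for step in range(1, max_steps + 1):
        query = propose_query(question, history)
        try:
            result = run_query(query)
        except Exception as exc:
            # Record the failure so the next proposal can take it into account.
            history.append({"step": step, "query": query, "error": str(exc)})
            continue
        history.append({"step": step, "query": query, "result": result})
        return {"answer": result, "history": history}
    return {"answer": None, "history": history}


def fake_llm(question, history):
    """Stand-in for the LLM: the first guess uses a wrong label, then corrects it."""
    if not history:
        return "g.V().hasLabel('movie').count()"
    return "g.V().hasLabel('title').count()"


def fake_graph(query):
    """Stand-in for the graph engine: only the 'title' label exists."""
    if "hasLabel('movie')" in query:
        raise ValueError("unknown label: movie")
    return [3]


outcome = run_agent("How many titles are there?", fake_llm, fake_graph)
```

In this run the first query fails, the error is recorded in the history, and the second proposal succeeds, which mirrors the query correction described above.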

Further prompt engineering, including few-shot examples, might be necessary for a more robust GraphRAG agent. We aim to release this in our next version.

Simplifying GraphRAG with PuppyGraph

PuppyGraph makes GraphRAG easier at every step of the process:

Graph Sourcing Stage: Forget about complicated data transfers or conversions—PuppyGraph’s Zero ETL approach means you connect directly to your data sources, whether it’s a data lake or a SQL database, without any hassle. Everything is treated as a unified graph, right from the start.

Graph Retrieval Stage: Whether you prefer Gremlin or Cypher, PuppyGraph has you covered with out-of-the-box support for both. You can choose whichever query language works best for you.

LLM Generation Stage: PuppyGraph isn’t just about retrieval—it provides PuppyGraphAgent, an Agentic GraphRAG framework. This means you can focus on the most important parts of your project, while PuppyGraph handles all the heavy lifting and the nitty-gritty details.

PuppyGraph streamlines every part of the GraphRAG process, so you can get the results you need without getting bogged down in the technical details.

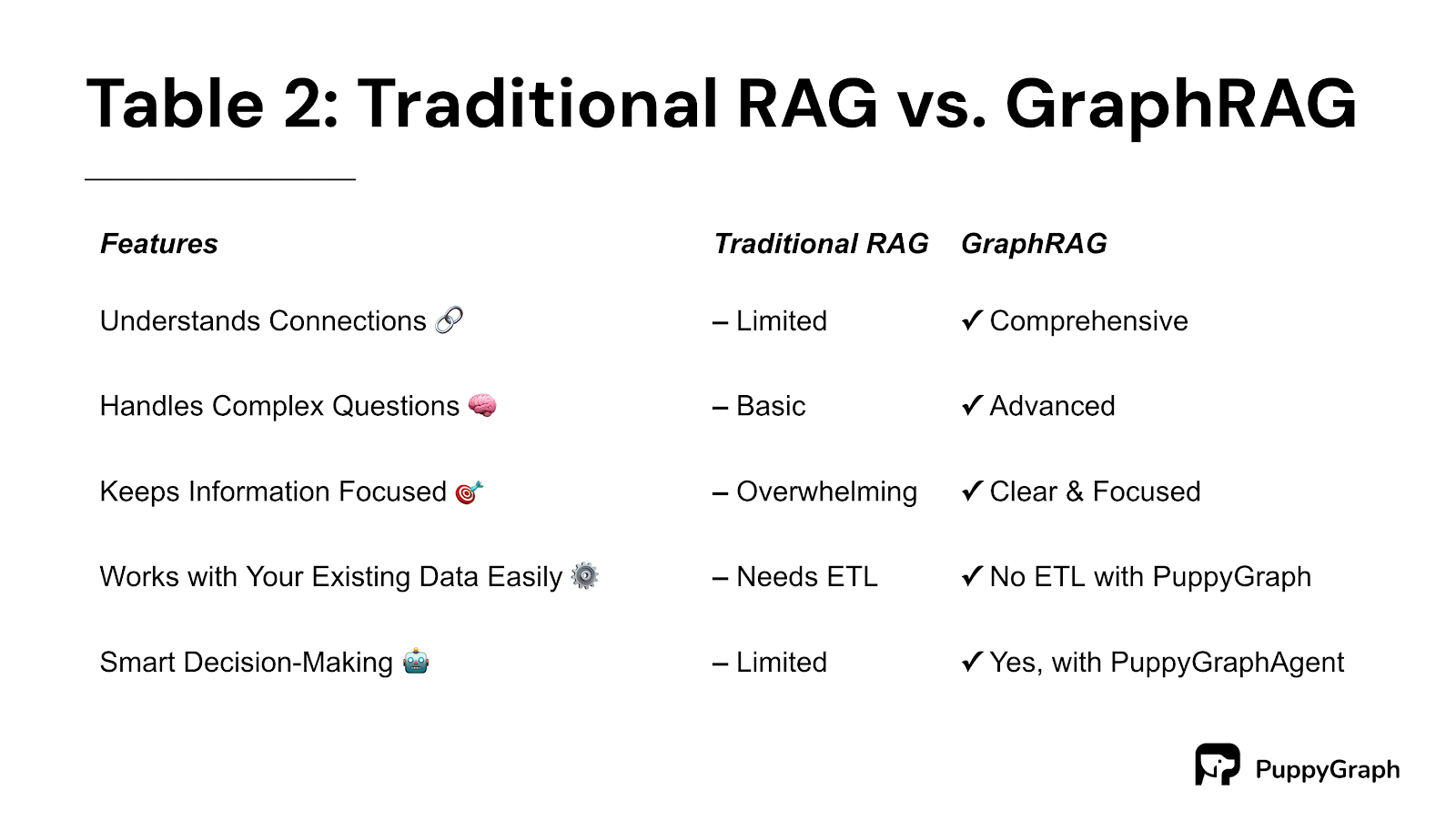

Advantages of GraphRAG

GraphRAG takes things up a notch compared to traditional RAG, offering several key advantages. Unlike traditional RAG, which pulls in isolated text, GraphRAG digs into the connections between pieces of information, giving you more accurate and meaningful answers. It excels at answering complex questions by understanding how different pieces of data relate, providing a more complete answer. Plus, GraphRAG focuses on the most relevant information, reducing the redundancy and overwhelming text that traditional RAG can produce. This ensures you get concise, targeted results without the extra noise.

Another major advantage is its flexibility in data integration. With PuppyGraph, you can easily map data from relational databases into a graph structure without needing complex ETL processes, making it simple to work with your existing data. Moreover, GraphRAG, especially with an agent framework like PuppyGraphAgent, doesn’t just retrieve information—it plans, adapts, and intelligently reasons through multiple queries to provide the best possible answers. In short, GraphRAG offers smarter, more connected answers, making it a powerful upgrade over traditional RAG.

Challenges in GraphRAG

GraphRAG is a game-changer, but it doesn’t come without its challenges. To really make the most of it, there are some significant hurdles to clear.

First, there’s the issue of complex knowledge graph creation. Building large-scale knowledge graphs from a mix of different data sources is no small feat. Typically, this requires extensive ETL (Extract, Transform, Load) processes, which can be time-consuming and resource-heavy. This complexity can slow down getting your GraphRAG up and running, making it harder to manage and update the graph data.

Next, scalability and latency issues become a real concern as your data grows to petabyte scale. Ensuring quick query responses while dealing with massive datasets, especially when you’re running complex, multi-hop queries, is tough. Many systems struggle with this, making it a key issue for any serious GraphRAG implementation.
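A small experiment gives a feel for why multi-hop queries get expensive. The sketch below counts how many new nodes a breadth-first search reaches at each hop. The graph is a synthetic tree with a fixed branching factor, chosen only to make the growth easy to see; real graphs are messier, and `k_hop_counts` and `complete_tree` are hypothetical helpers.

```python
def k_hop_counts(adjacency, start, max_hops):
    """Return how many nodes are first reached at each hop from start."""
    seen = {start}
    frontier = [start]
    counts = []
    for _ in range(max_hops):
        next_frontier = []
        for node in frontier:
            for neighbor in adjacency.get(node, ()):
                if neighbor not in seen:
                    seen.add(neighbor)
                    next_frontier.append(neighbor)
        counts.append(len(next_frontier))
        frontier = next_frontier
    return counts


def complete_tree(branching, depth):
    """Build an adjacency dict for a tree where each node has `branching` children."""
    adjacency = {}
    level = [0]
    next_id = 1
    for _ in range(depth):
        new_level = []
        for node in level:
            children = list(range(next_id, next_id + branching))
            next_id += branching
            adjacency[node] = children
            new_level.extend(children)
        level = new_level
    return adjacency


counts = k_hop_counts(complete_tree(branching=3, depth=4), start=0, max_hops=4)
# counts is [3, 9, 27, 81]: the frontier triples with every extra hop.
```

Even with only three neighbors per node the frontier grows geometrically, so a 10-hop traversal over well-connected data can touch a very large part of the graph.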

Then, there’s the challenge of building intelligent, adaptive reasoning systems. As your graph expands, the number of potential subgraphs can skyrocket, making it harder to zero in on the most relevant ones without overwhelming the system. Plus, accurately matching a textual query to the right graph data isn’t easy. It requires advanced algorithms that can grasp both the text and the complex structure of the data, all while reasoning and replanning on the fly. Developing a system that can handle all this requires sophisticated, flexible frameworks.

These challenges are significant, but overcoming them is key to unlocking the full potential of GraphRAG in any application.

How PuppyGraph can help with GraphRAG

PuppyGraph brings some serious firepower to the table when it comes to GraphRAG. Here’s how:

Zero ETL for Hassle-Free Graph Creation: PuppyGraph connects directly to all your data sources without the need for complex ETL (Extract, Transform, Load) processes. It treats all your data as a unified graph without needing to migrate anything into a dedicated graph database. This makes it super easy to create large-scale knowledge graphs and run queries, getting your GraphRAG up and running in as little as 10 minutes.

Agentic GraphRAG: Traditional RAG just retrieves and generates, but PuppyGraph’s Agentic GraphRAG takes it further. It’s goal-driven, meaning it plans, runs multiple graph queries, and reasons intelligently based on the results. If needed, it can even re-plan or summarize to get the best possible answer. Plus, there is our open-source library, PuppyGraphAgent, to help you set this up.

Built-In Support for Gremlin & Cypher: With PuppyGraph, you’re not locked into one query language. It supports both Gremlin and Cypher, so your GraphRAG application can pick the best tool for the job—or use both! This flexibility boosts accuracy and tailors queries to fit the specific input, making sure you get the most desired results.

Petabyte Scale with Ultra-Low Latency: PuppyGraph is built to handle massive amounts of data—think petabyte scale—with lightning speed. Even complex queries, like a 10-hop neighbor search, take just 2.26 seconds. This means you can dig out even the most hidden insights from your vast datasets, kind of like finding a needle in a haystack but at super speed.

Conclusion

GraphRAG takes things up a notch by addressing the gaps in traditional RAG, focusing on how pieces of information connect to give you more accurate and insightful answers. It’s not just about pulling in facts—it’s about seeing how they fit together, which is key for handling complex questions. That said, making GraphRAG work comes with its own set of challenges. Building and managing large-scale knowledge graphs, dealing with scalability, and ensuring everything runs smoothly aren’t easy tasks. But with the right approach, these obstacles can be tackled, unlocking the full potential of what GraphRAG can offer.

Looking to the future, GraphRAG is poised to change the way we work with LLMs. As we continue to refine these methods, we’re on the brink of seeing even more powerful, connected, and insightful AI Agents. The future of AI is all about leveraging those connections, and GraphRAG is leading the charge toward a smarter, more integrated world of possibilities.

Get started with PuppyGraph!

Developer Edition

- Forever free

- Single node

- Designed for proving your ideas

- Available via Docker install

Enterprise Edition

- 30-day free trial with full features

- Everything in Developer Edition plus enterprise features

- Designed for production

- Available via AWS AMI & Docker install